Ambisonics for Virtual Reality and 360° Soundfields

Why Ambisonics?

Actually, this audio format is about 40 years old and has barely established itself. Ambisonic audio is great, but also pretty complicated at first. One of the problems is that the sound usually has to be encoded into the Ambisonics domain and decoded again for playback. In addition, the sweet spot is very small for speaker arrays.

However, since 360° videos are played back almost exclusively via headphones with VR headsets, the necessary decoders can already be implemented in the software of the video players. A binaural stereo signal is calculated in real time from the Ambisonics signal via the HRTF (Head-Related Transfer Function), and this output changes depending on the viewer’s viewing direction inside the sphere.
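The head-tracking part of this can be sketched in a few lines. The snippet below is only an illustration, not any player's actual implementation. It assumes a first-order sample given as W, X, Y, Z with X pointing forward, Y to the left and Z up, and it compensates a head turn by rotating the sound field in the opposite direction. The binaural HRTF stage that would follow is omitted, and the function name `rotate_yaw` is made up for this example.

```python
import math

def rotate_yaw(w, x, y, z, head_yaw_deg):
    """Counter-rotate one first-order sample for a listener who turned
    their head by head_yaw_deg (positive = to the left).

    Assumed convention: X points forward, Y points left, Z points up.
    W (omni) and Z (height) do not change for a rotation around the vertical axis.
    """
    a = math.radians(-head_yaw_deg)  # the scene moves opposite to the head
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return w, x_rot, y_rot, z

# A source straight ahead has its directional component on X only.
front = (1.0, 1.0, 0.0, 0.0)
# After a 90° head turn to the left, it sits on the listener's right (negative Y).
w, x, y, z = rotate_yaw(*front, head_yaw_deg=90)
```

Pitch and roll would need analogous rotations that also involve the Z channel.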

Unlike conventional stereo and surround sound formats, which are based on panning audio signals to specific speakers, Ambisonics captures full directional information, including height, and thus provides a complete three-dimensional soundfield. This makes Ambisonics ideal for immersive audio applications.

Before delving into Ambisonics, it’s essential to understand its precursor and distant “cousin”: the Mid-Side technique. Mid-Side is a widely used stereo recording method in which a cardioid microphone captures sound from the front (Mid) and a figure-of-eight microphone captures sound from the sides (Side). This technique forms the foundation for understanding the concepts of spatial audio and encoding sound information, which are fundamental aspects of Ambisonics.
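As a rough sketch of what "encoding sound information" means here, Mid-Side signals are turned into left and right by a simple sum and difference. The function names below are illustrative, and the example assumes the usual convention that the positive lobe of the Side microphone points to the left.

```python
def ms_to_lr(mid, side):
    """Decode one Mid/Side sample pair to Left/Right (sum and difference)."""
    left = mid + side
    right = mid - side
    return left, right

def lr_to_ms(left, right):
    """Encode Left/Right back to Mid/Side (inverse of ms_to_lr)."""
    mid = (left + right) / 2
    side = (left - right) / 2
    return mid, side

left, right = ms_to_lr(1.0, 0.5)   # more Side signal means a wider stereo image
```

Scaling the Side signal before decoding changes the stereo width after the recording, which is the same idea that later makes Ambisonics recordings rotatable and decodable after the fact.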

Ambisonic Recordings

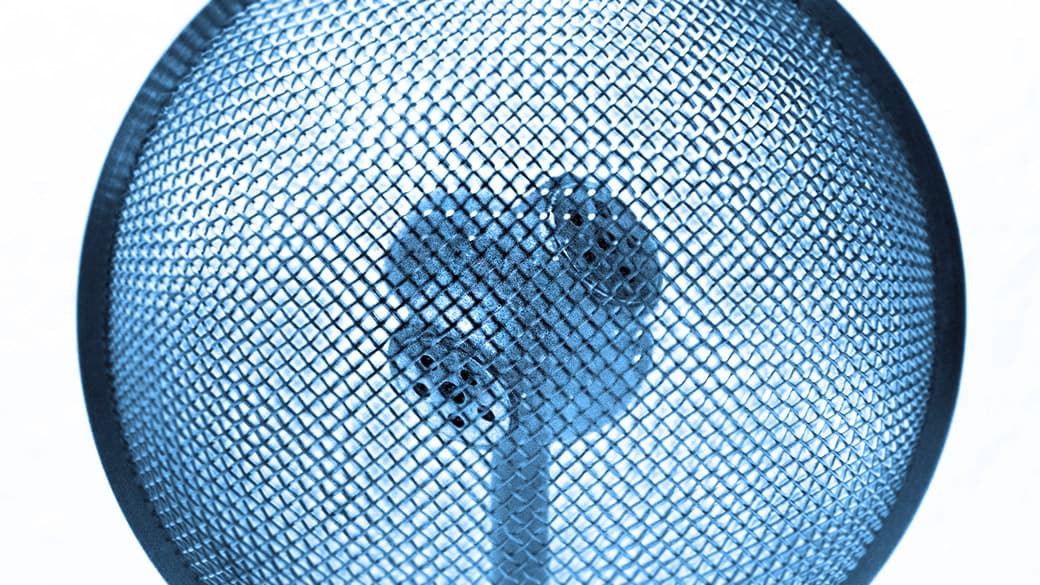

For an Ambisonics microphone, a compact tetrahedral arrangement of four cardioid capsules that together cover all directions of the sphere (see picture above) has proven its worth. The signal of such a SoundField-style microphone is recorded with a four-track recorder, which should have as little self-noise as possible and digital gain control to ensure identical preamplification of all four channels.

Ambisonic recordings capture the sound field across the full 360° around the microphone, including height information, allowing for the creation of immersive 3D soundscapes.

Because only four audio channels are used, the spatial resolution is not very high. Recorder and microphone are placed as close as possible to the 360° camera, since the camera tripod is retouched out of the image and the sound equipment disappears along with it.

Raw Ambisonic recordings are referred to as A-format and must later be converted to B-format in post-production by an encoder plugin before they can be processed further.

In the following, we will present some relevant Ambisonics microphones that can be used for VR productions. Spoiler: there is no perfect Ambisonic microphone.

Soundfield Ambisonic Microphones

SoundField offers three high-quality Ambisonic microphones in the higher price segment. For VR productions, these microphones may be too big in some cases, as they are usually hidden under the camera. A handier alternative is the newer Røde NT-SF1, which was developed in collaboration between Røde and SoundField. As with all Ambisonic microphones, the result is a complete three-dimensional soundfield.

Sennheiser Ambeo VR Microphone

The Sennheiser Ambeo® VR microphone also offers a good balance between size and sound quality. The Ambeo produces what is known as A-format, a raw 4-channel file that must be converted to Ambisonics B-format. Sennheiser provides the appropriate software for this purpose. Together with Dear Reality, Sennheiser offers a plug-in that converts the audio signals from A-format to B-format and can rotate them directly.

Zoom H3-VR (Virtual Reality)

For smaller productions, the Zoom H3-VR recorder is a relatively inexpensive and practical solution. Unlike the other Ambisonic microphones, this one already has a recorder built in, which can speed up the workflow considerably. In addition, Zoom offers standalone software for audio editing. This allows the Ambisonic files to be converted to stereo, binaural audio or 5.1, for example, without having to use a DAW.

Eigenmike

An interesting system with a different approach is the MH Acoustics Eigenmike. With this microphone it is possible to record both First Order Ambisonics (FOA) and Higher Order Ambisonics. The microphone contains a large number of individual microphone capsules. However, it not only comes at a very high price, it is also challenging to achieve good sound quality with such a complex system.

Post-Production

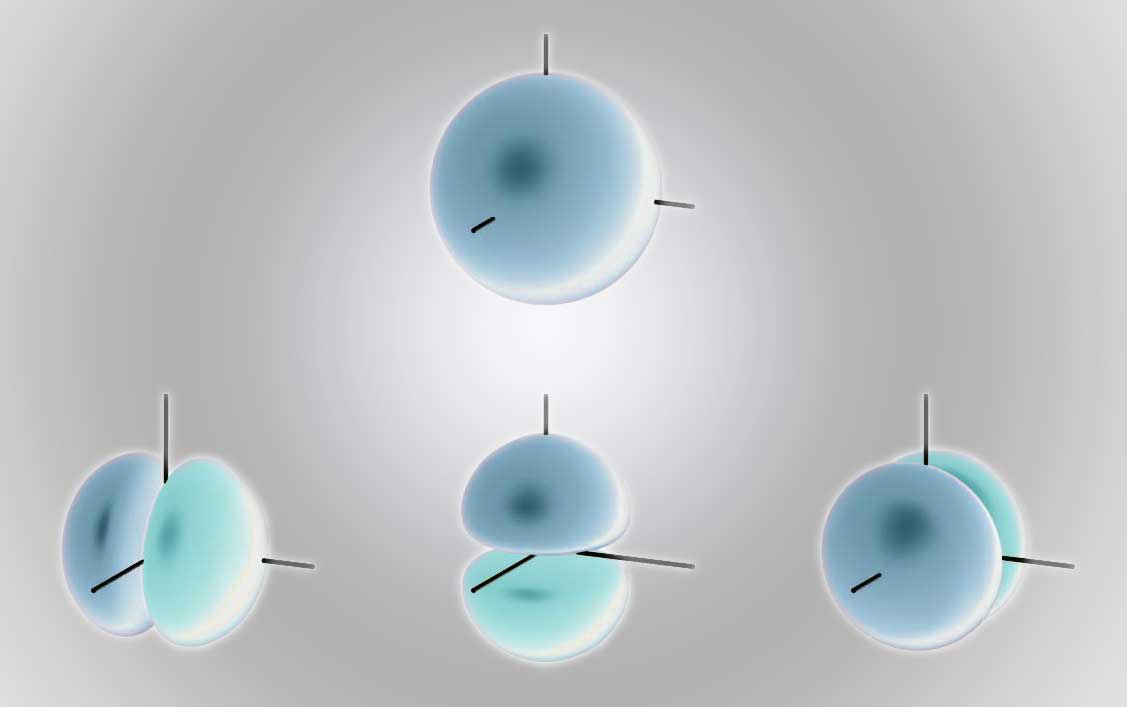

The audio signal keeps its four channels when converting from A-format to B-format, but instead of the microphone capsules 1, 2, 3 and 4, the signals in B-format are now the W, X, Y and Z channels. X, Y and Z are figure-of-eight polar patterns along the three spatial axes, while the W channel is a compatible mono signal that contains all signal components and is therefore omnidirectional. The image above illustrates the arrangement of the described channels. Mathematicians call this kind of arrangement spherical harmonics. You can think of it as mid-side stereophony, only with three side signals instead of one.
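The A-to-B conversion described above can be sketched as sums and differences of the four capsule signals. This is an idealized version only: real converter plugins additionally apply correction filters for the capsule spacing and their own gain scaling. The capsule order assumed here (front-left-up, front-right-down, back-left-down, back-right-up) is a common tetrahedral layout, but it differs between microphones, so check the manufacturer's documentation. The function name is illustrative.

```python
def a_to_b(flu, frd, bld, bru):
    """Idealized A-format -> B-format conversion for one sample.

    Assumed capsule layout:
      flu = front-left-up,   frd = front-right-down,
      bld = back-left-down,  bru = back-right-up
    No spacing-correction filters and no gain normalization are applied.
    """
    w = flu + frd + bld + bru   # omnidirectional sum of all capsules
    x = flu + frd - bld - bru   # front minus back
    y = flu - frd + bld - bru   # left minus right
    z = flu - frd - bld + bru   # up minus down
    return w, x, y, z

# Sound arriving mainly at the two front capsules shows up on W and X only.
w, x, y, z = a_to_b(1.0, 1.0, 0.0, 0.0)
```

The mid-side analogy is visible in the code: W plays the role of Mid, and X, Y and Z are three difference ("Side") signals.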

The four-channel resolution is relatively diffuse but is particularly suitable for ambiences and location sound, which would otherwise have to be laboriously recreated in post-production with Foley and placed in the 3D audio space. Since the Ambisonic recording can be adopted as it is from the set and the head-tracking already works, it offers sound designers a good starting point for the spatial sound mix. Additional tracks can be positioned and moved using Ambisonic mixing tools to create a complete sphere of sound for an immersive experience.
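What such a mixing tool does when it positions a mono track can be sketched as follows. This is a minimal first-order panner under stated assumptions, not the algorithm of any particular plugin: it assumes AmbiX conventions (ACN channel order W, Y, Z, X and SN3D normalization), azimuth measured counter-clockwise from the front, and elevation measured upwards. The function name is made up for the example.

```python
import math

def encode_foa_ambix(sample, azimuth_deg, elevation_deg):
    """Pan one mono sample into first-order AmbiX (ACN order: W, Y, Z, X; SN3D).

    azimuth_deg:   0 = front, 90 = left (counter-clockwise positive)
    elevation_deg: 0 = horizon, 90 = straight up
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                  # omni component
    y = sample * math.sin(az) * math.cos(el)    # left/right
    z = sample * math.sin(el)                   # up/down
    x = sample * math.cos(az) * math.cos(el)    # front/back
    return w, y, z, x

# A source hard left on the horizon lands on W and Y only.
w, y, z, x = encode_foa_ambix(1.0, azimuth_deg=90, elevation_deg=0)
```

Moving a source over time then simply means changing the two angles from sample block to sample block.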

Ambisonics Sound Formats

There are two major spatial audio formats used for VR. The first is the open AmbiX format, which is compatible with YouTube, Facebook, Samsung, Oculus, and many other VR players. The second is the Two Big Ears format (.tbe). It was developed by the company Two Big Ears, which was later bought by Facebook.

When designing sound for VR movies in digital audio workstations (DAWs), the B-format is often used. Here it is important that the DAW supports tracks with at least four channels. Standard DAWs such as ProTools, Logic or Audition support mixing sound for videos, but they cannot handle VR videos on their own. Additional plugins are needed for this, for example the free Facebook 360 Spatial Workstation (FB360). With this plugin suite, VR videos can be played back in the DAW and spatial audio for VR can be generated.

Surround Sound Applications and Spatial Audio

Surround sound enthusiasts will find a compelling advantage in Ambisonics. Most immersive audio and traditional surround sound formats are hemispherical and primarily focus on left-right sound projection. Ambisonics, by contrast, offers spherical audio projection and even incorporates height information “from below”, unlike technologies such as Dolby Atmos. This is an interesting benefit for sound reproduction, but more on that later. Because Ambisonics describes the sound field itself rather than individual speaker feeds, it can be decoded to almost any speaker array, from stereo to multi-channel 3D surround formats.

Platforms for VR Content

As the fan base continues to grow, so does the number of platforms on which VR content is made available. However, many of these platforms have demanding content requirements and are not always easily accessible to VR creators.

The two leading platforms that allow immersive video content such as VR movies to be uploaded are YouTube 360 and Facebook 360, both of which host tens of thousands of VR videos. YouTube 360 supports First Order Ambisonics as a B-format signal, while Facebook 360 also accepts .tbe content with an optional head-locked stereo track. The corresponding audio formats can be exported with the FB360 encoder to ensure correct playback on the respective platform.
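Export tools take care of channel conventions, but it helps to know what is involved, because "B-format" exists in more than one convention. The sketch below assumes the source material is in the traditional convention (often called FuMa: channel order W, X, Y, Z with W attenuated by 3 dB) and converts one first-order sample to AmbiX (order W, Y, Z, X with SN3D normalization). Whether a given file is FuMa or AmbiX is an assumption you need to verify for your own material, and the function name is illustrative.

```python
import math

def fuma_to_ambix(w, x, y, z):
    """Convert one first-order sample from FuMa (W, X, Y, Z) to AmbiX (W, Y, Z, X).

    At first order only two things change:
      * W is scaled by sqrt(2) to undo the -3 dB attenuation used in FuMa,
      * the channels are reordered to ACN order.
    """
    return w * math.sqrt(2.0), y, z, x

ambix = fuma_to_ambix(1.0 / math.sqrt(2.0), 0.1, 0.2, 0.3)
```

Mixing up the two conventions typically shows up as sources that appear in the wrong direction after upload, since X, Y and Z end up on the wrong channels.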

Advantages / Disadvantages

First-order Ambisonics is an extremely useful way to create spatial sound for VR movies: it can render sounds above and below the listener, providing a complete sphere of sound. However, it also has some limitations. The spatial resolution of the B-format is limited, and the sound field cannot always be reproduced perfectly, especially when recording with Ambisonics microphones in reverberant environments or when the microphone is far away from the sound source. In such cases, the sound may appear diffuse. Binaural playback that relies on a simulated, generic HRTF (Head-Related Transfer Function) can also lead to localization errors.

One possible solution for diffuse recordings is to place the Ambisonics microphone closer to the sound source, as is common in conventional movies. However, this has the disadvantage that the microphone is visible in a 360° shot. In addition, spatial perception can be distorted if the microphone is far away from the 360° camera, since the sound sources then no longer correspond to their visual positions.

Advantages

- Ambisonics is spherical, so it can also reproduce sound “from below”, unlike most surround or immersive audio formats, which are hemispherical.

- Patents have already expired, so the technology is virtually free.

- Ambisonics can be extended arbitrarily to higher orders with more channels. In the other direction, no extra downmix is necessary, e.g. to get from 16 channels to 4: the additional channels are simply dropped and only the resolution is reduced.

- With first-order AmbiX (ACN, SN3D), a standard format is establishing itself (e.g. on YouTube).
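The point about higher orders can be made concrete with a small sketch. A full-sphere signal of order N has (N + 1)² channels, and with ACN channel ordering the channels of the lower orders come first, so reducing the order means keeping the first few channels. The helper names are illustrative, and the example assumes ACN ordering with SN3D (or N3D) normalization, where no rescaling is needed.

```python
def channel_count(order):
    """Number of channels of a full-sphere Ambisonics signal of the given order."""
    return (order + 1) ** 2

def truncate_order(frame, target_order):
    """Reduce one frame (list of channel samples in ACN order) to a lower order.

    Assumes ACN ordering, where lower-order channels come first,
    so truncation is just slicing; no downmix matrix is involved.
    """
    n = channel_count(target_order)
    if n > len(frame):
        raise ValueError("frame has fewer channels than the target order needs")
    return frame[:n]

third_order_frame = [float(i) for i in range(channel_count(3))]  # 16 channels
first_order_frame = truncate_order(third_order_frame, 1)          # 4 channels
```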

Disadvantages

- Cannot be played back correctly without a decoder, and the same audio may sound different on different platforms.

- Low compatibility with static stereo sounds (e.g. music), so a workaround such as a head-locked stereo track is required.

- Ambisonics is scene-based, so it offers limited possibilities to move away from the camera position, which makes it less suited to interactive VR experiences.

- Higher-order Ambisonics improves localization, but requires a high bitrate and does not really solve the problem of being limited to a fixed number of audio tracks.
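To get a feeling for the bitrate issue, the uncompressed data rate can be estimated from the channel count. The sample rate of 48 kHz and the bit depth of 24 bit below are assumptions chosen for illustration; streaming platforms use compressed audio, so real figures will be lower.

```python
def uncompressed_bitrate_mbps(order, sample_rate_hz=48_000, bit_depth=24):
    """Uncompressed PCM bitrate in Mbit/s for a full-sphere signal of the given order."""
    channels = (order + 1) ** 2
    return channels * sample_rate_hz * bit_depth / 1_000_000

first = uncompressed_bitrate_mbps(1)   # 4 channels  -> 4.608 Mbit/s
third = uncompressed_bitrate_mbps(3)   # 16 channels -> 18.432 Mbit/s
```

Because the channel count grows with the square of the order, each additional order quickly multiplies the data rate.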

Additional Thoughts on Ambisonic Audio

While Ambisonics has a rich history and proven worth in immersive audio applications, recent developments are pushing its boundaries further. Innovations in hardware and software are enhancing its integration into VR and 360° environments.

Hardware Advancements

New generations of ambisonic microphones, like the Zylia ZM-1, feature multiple capsules for higher-order Ambisonic recordings, providing better spatial resolution. This user-friendly and cost-effective microphone makes high-quality ambisonic recording accessible to both professional audio engineers and hobbyists.

Software Enhancements

Advancements in real-time processing and encoding simplify workflows for Ambisonic audio. Tools like Reaper in combination with the Ambisonic Toolkit (ATK) offer powerful solutions for spatial audio production. Game engines such as Unity and Unreal Engine now support Ambisonics, enabling interactive and dynamic audio environments in VR applications.

Use in Interactive Experiences

Ambisonics enhances interactive VR environments, such as video games and virtual tours, by adapting the sound field in real-time as users move through these spaces. This dynamic adaptation maintains immersion and realism, crucial for a seamless auditory experience.

But this only works for rather linear sound field applications. As soon as the three-dimensional space is supposed to be non-linear or to offer six degrees of freedom, the entire mix needs to be reconsidered.

Educational and Training Applications

Ambisonics is also utilized in education and training. Virtual simulations, such as medical training environments or flight simulators, use Ambisonic audio to create authentic soundscapes, improving learning outcomes and situational awareness.

Future Prospects

The future of Ambisonics is promising, with ongoing research focusing on increasing spatial resolution and improving decoding algorithms. Emerging technologies like machine learning are being explored to enhance HRTF customization for more personalized spatial audio experiences. New codecs and compression techniques will enable higher-order Ambisonic audio to be efficiently streamed, broadening its accessibility and applications.

In conclusion, Ambisonics is continuously evolving, with hardware and software advancements expanding its potential. From interactive VR experiences to advanced training simulations, Ambisonics will play a crucial role in the future of immersive audio, offering unparalleled realism and immersion in virtual and augmented realities.