How Do We Localize Binaural 3D Sound?

To enjoy 3D audio, you need spatial hearing. Spatial what? OK, let’s take it one step at a time:

Over the past few years, I have repeatedly supervised bachelor’s and master’s theses or given expert interviews. As a basis for their work in 3D audio, almost all students have written a chapter on spatial listening and binaural recording. To make it easier for the next generation, I thought it would be useful to collect the knowledge here. Even if it is partly quite scientific, I want to make the topic understandable for non-audio people. But admittedly, it seems quite theoretical at first sight.

But for motivation: our brain manages the whole thing with only two ears, a fascinatingly shaped pinna, and complex processes in the brain. This helps us in everyday life, for example, to experience the fascination of live music or even to be aware of dangers that we cannot see. Already exciting!

Introduction to Binaural Audio

Binaural audio is a revolutionary sound technology that provides a more immersive and realistic listening experience. By using two microphones to capture sound from two different locations, binaural audio creates a 3D audio experience that simulates the way humans hear the world. This technology has been around since the 1800s, but it has gained popularity in recent years due to its applications in virtual reality, gaming, and music production.

The word “binaural” means “two ears,” and that’s precisely how binaural sound works. By placing two microphones in the same position as the human ears, binaural audio captures sound in the same way that our ears do. This allows listeners to pinpoint the location of sounds in space, creating a more immersive and engaging experience.

Binaural audio is not the same as surround sound, which typically uses six or more discrete channels (5.1, for example). Binaural recordings are just stereo left and right channels, but they are recorded in a way that mimics the way humans hear. This makes binaural audio a unique and powerful tool for creating immersive audio experiences.

In the next section, we’ll explore how binaural audio works and how it’s used in various applications. We’ll also discuss the benefits of binaural audio and how it’s changing the way we experience sound.

Spatial hearing – what is it?

Spatial hearing describes the ability of the human auditory system to perceive the environment in three dimensions. Our sense of hearing or, more precisely, our auditory perception is fundamentally composed of the following steps:

- the mechanical processing of sound

- sound reception

- the neuronal conversion via the auditory pathway and finally

- cognitive processing in the brain

Sound waves enter the outer ear, travel through the ear canal, and impact the eardrum, thereby initiating the sound localization process critical for determining the direction of sound sources.

The perception of sound through two ears is also known as binaural hearing and equips us with the ability to locate sound and hear direction.

For binaural 3D audio, two audio channels are enough

Even if the vernacular likes to claim otherwise – two ears are actually enough to perceive sound in 3D, even behind us, for example. That’s why I’m such a big fan of 3D over headphones, the aforementioned binaural sound. But how can our brains do that without technical aids like hearing aids? Stereo headphones are essential to fully appreciate the realistic soundscapes created by binaural recordings, which enhance immersive experiences in virtual reality, gaming, and health and wellness applications.

The levels of hearing

What we hear is localized by comparing the direction and distance of sound at the left and right ear and interpreted by our brain.

The head-related transfer function (HRTF) plays a crucial role in this process by acting as a unique filter that characterizes how sounds are affected by the listener’s physical structure, enabling spatial awareness in binaural audio experiences.

To better define and map such auditory events, three-dimensional space is transferred to a head-related coordinate system and divided into three individual planes. Each plane spans two of the three axes X, Y, and Z:

- The horizontal plane: Lies horizontally and covers the directions right, left, back, and front.

- The frontal plane: Runs around the ears and neck and defines the left, right, bottom and top.

- The median plane: Has a course over the nose, the neck as well as the back of the head and stands for the directions behind, in front, below and above.

However, localization does not rest on any single characteristic of the auditory system or the associated signal processing in the brain. Rather, it is a combination of different localization mechanisms that lets us determine the direction of a sound source quickly and accurately.

Using the spherical coordinates azimuth, elevation, and distance, a localized sound event can be described in a mathematical model and mapped into a coordinate system with X, Y, and Z axes. For the sound event to be localized reasonably accurately, this must take place binaurally, i.e. with two ears. As the following sections show, localization on the horizontal and vertical planes works in different ways.
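To make the mapping from azimuth, elevation, and distance to X, Y, and Z a bit more tangible, here is a minimal Python sketch. The axis convention (X to the front, Y to the left, Z up, azimuth counted counterclockwise from the front) and the function name are assumptions chosen for illustration; other tools may use different conventions.

```python
import math


def spherical_to_cartesian(azimuth_deg, elevation_deg, distance):
    """Convert a head-related direction to X, Y, Z.

    Assumed convention (not from the article): X points to the front,
    Y to the left, Z up. Azimuth 0 is straight ahead, elevation 0 is
    the horizontal plane, elevation 90 is directly above.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance * math.cos(el) * math.cos(az)  # front (+) / back (-)
    y = distance * math.cos(el) * math.sin(az)  # left (+) / right (-)
    z = distance * math.sin(el)                 # up (+) / down (-)
    return x, y, z


# A source 2 m away, 90 degrees to the left, at ear height
print(spherical_to_cartesian(90.0, 0.0, 2.0))
```

With this convention, a source straight ahead lies on the X axis, and a source directly above lies on the Z axis.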

How does localization work on the horizontal plane?

In the horizontal plane, the auditory event is localized by differences in arrival time and by frequency-dependent level differences between the left and right ears. In natural hearing, both interaural time differences and frequency-dependent interaural level differences occur; more about this later.

How does localization work on the vertical plane?

On the vertical plane, the so-called median plane, which lies on the symmetry plane of the head, localization does not rely on time-of-flight or level differences, because these are the same at both ears. Instead, it relies on timbre differences that depend on the direction of the incoming signal and are determined by the shape of the head and ears. Depending on the direction of incidence, specific direction-determining frequency bands are boosted.

The definition of sound

It is necessary to consider and define the component of sound from several perspectives. Physically defined, sound is an oscillation in pressure, stress, particle displacement, particle velocity, etc., propagating in a medium with internal forces (e.g., elastic or viscous), or the superposition of such a propagating oscillation. The sensation of hearing is caused by the vibration described. Techniques used in recording sound, such as binaural recording, have evolved to capture 3D audio experiences, enhancing the quality and realism of sound reproduction in immersive applications like music and films.

Sound in physics

Sound can therefore be regarded as mechanical oscillations that propagate in the form of waves (sound waves) in an elastic medium (e.g., air or water) and is the objective cause of hearing. In contrast to liquid and gaseous media, where sound propagates only in the form of longitudinal waves, transverse waves are also present in solids.

Colloquially, sound refers primarily to the noise, the tone, or the bang as it can be perceived auditorily by humans and animals with the auditory system. However, the sound should also be understood as the sensation perceived by the ear.

Sound can not only be heard but also felt

In summary, there are several definitions that apply to the phenomenon of sound. While the technical approach illustrates in a very visual way what happens when we perceive sound (a sound wave vibrates in a medium), sound can also be considered as a sensory stimulus of our auditory organ.

This in turn triggers emotions and other stimuli. Tones, sounds, noises, etc. can therefore not only be heard but also felt or sensed. Both ways of looking at sound are important for the basic understanding of sound and how it is perceived by human hearing.

Basics of room acoustics

Every room has acoustic properties and information, as well as effects on a sound event that takes place in it. Some of these can be used as an advantage and others must be minimized to get a clean recording of a concert or movie dialogue. In other words, a large church hall may not be the best place to record a fast and loud spoken dialogue because of the amount of reverberation and reflections.

On the other hand, a recording of a chorus in an acoustically dry studio space doesn’t get that epic sonic character from natural room reflections. Accurate audio recording in different environments is crucial to capture the intended sound quality and spatial acoustics.

To get an idea of how sound propagates in an enclosed space, the graph below depicts the behavior of direct sound, early reflections, and absorption.

So it is not only the already mentioned differences in the direct sound in terms of time, sound pressure, etc. that are decisive. Especially when mixing 3D audio, I notice how important the reflections of a room are, including the corresponding early reflections and reverberation. The DearVR tool, for example, offers this combination of 3D localization and matching reverb.

How is direct sound created?

A sound source in an enclosed space radiates sound waves in many different directions. These sound waves reach the listening position along different paths. Direct sound is defined as those sound waves that take the shortest path between the sound source and the listener’s position.

How are reflections and diffuse sound formed?

If the sound wave from a source is reflected by one or more surfaces before it reaches the listener, this is known as reflection. The so-called early reflections are those that are reflected from the closest room boundaries. If these reflections follow each other so closely that they can no longer be perceived as individual reflections, we speak of diffuse sound.
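As a rough illustration of why a reflection arrives later than the direct sound, the following sketch compares the two path lengths for a single floor reflection. It assumes a perfectly flat, reflecting floor, a speed of sound of 343 m/s, and a mirror-image construction of the reflected path; the function name and the example geometry are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C


def floor_reflection_delay(distance_m, source_height_m, listener_height_m,
                           c=SPEED_OF_SOUND):
    """Delay in seconds of a single floor reflection after the direct sound.

    The reflected path is found by mirroring the source below the floor,
    which is an illustrative simplification, not a full room model.
    """
    direct = math.hypot(distance_m, source_height_m - listener_height_m)
    reflected = math.hypot(distance_m, source_height_m + listener_height_m)
    return (reflected - direct) / c


# Source and listener 4 m apart, both 1.5 m above the floor:
# the direct path is 4 m, the reflected path is 5 m.
delay = floor_reflection_delay(4.0, 1.5, 1.5)
print(f"{delay * 1000:.2f} ms")
```

In this example the reflection travels one meter farther than the direct sound and therefore arrives a few milliseconds later.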

When do we talk about absorption?

In addition to reflections, absorption of sound also occurs, depending on the surface. If a surface is acoustically hard (as the name implies, this is the case for hard materials such as stone, which do not resonate), relatively little energy is extracted when sound strikes it. If the absorption coefficient of a surface is high (e.g. sofas, curtains), a large part of the acoustic energy is converted into thermal energy.

Energy is thus extracted from the sound. While glass or concrete, for example, has a low absorption coefficient, it is very high for carpets or foam materials. The absorption coefficient describes the ratio between the absorbed energy and the energy of the incident sound wave and indicates how good the absorption capacity of a material is.
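The ratio described above can be written down in a few lines. This is only a sketch: the function names are made up for illustration, the energy values are arbitrary, and it assumes that all energy that is not absorbed is reflected (no transmission through the surface).

```python
def absorption_coefficient(absorbed_energy, incident_energy):
    """Ratio of absorbed energy to the energy of the incident sound wave."""
    if incident_energy <= 0:
        raise ValueError("incident_energy must be positive")
    return absorbed_energy / incident_energy


def reflected_energy(incident_energy, alpha):
    """Energy left after one reflection, assuming nothing is transmitted."""
    return incident_energy * (1.0 - alpha)


# Arbitrary example values: 30 of 100 energy units are absorbed
alpha = absorption_coefficient(30.0, 100.0)
print(alpha)                           # 0.3
print(reflected_energy(100.0, alpha))  # 70.0
```

A coefficient close to 0 describes an acoustically hard surface, a coefficient close to 1 a strongly absorbing one.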

What influence does the reverberation time have?

In an enclosed space, a sound event is reflected several times before it finally reaches such a low level that it is no longer perceptible. The reverberation time, therefore, describes the time in which the sound pressure level (SPL) of the reverberation has dropped 60 dB below the original sound pressure level. This is why the reverberation time is also referred to as RT60 (Reverberation Time).

It plays an essential role in the sound character of a room. Speaker and vocal booths have a maximum reverberation time of about 0.3 seconds. This ensures that the direct sound of a recording is as undistorted as possible.

At the other extreme, locations such as large churches are often used for recordings in order to benefit from room characteristics such as reverberation time and to give the recording a certain sound. Here, the reverberation time can be several seconds long. Reverberation time, therefore, plays a very important role in microphone recordings and should always be taken into account in order to achieve high-quality results.
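To illustrate the 60 dB definition, here is a small sketch that reads the reverberation time off a list of level values. The decay curve is synthetic and the function names are hypothetical; real measurements usually work with noisy data and more robust methods.

```python
def pressure_ratio_for_drop(drop_db):
    """Sound pressure ratio that corresponds to a level drop in dB."""
    return 10.0 ** (-drop_db / 20.0)


def rt60_from_levels(levels_db, step_s):
    """Time at which the level first lies 60 dB below the first sample.

    levels_db: level values in dB, sampled every step_s seconds.
    Returns None if the curve never drops by 60 dB.
    """
    start = levels_db[0]
    for i, level in enumerate(levels_db):
        if start - level >= 60.0:
            return i * step_s
    return None


# Synthetic decay: starts at 90 dB and loses 3 dB every 0.1 s
levels = [90 - 3 * i for i in range(40)]
print(rt60_from_levels(levels, 0.1))
print(pressure_ratio_for_drop(60.0))
```

A drop of 60 dB means the sound pressure has fallen to one thousandth of its original value.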

Basics of psychoacoustics and psychoacoustic effects

Psychoacoustics is a science in itself. In fact, phenomena happen in our heads during sound processing that we cannot explain with physics. Actually, one would have to write a separate article for this. But here’s a brief introduction to spatial hearing and 3D audio. The phenomenon of autonomous sensory meridian response (ASMR) is also related to psychoacoustics, where binaural audio recordings can trigger tingling sensations and are often used for relaxation, studying, and sleep.

How does the human ear work?

The human ear, as in other mammals, contains sensory organs that perform two very different functions: hearing and the postural balance and coordination of head and eye movements.

Anatomically, the ear consists of three distinguishable parts: the outer, middle, and inner ear. The outer ear consists of the visible part, the pinna (auricle), which protrudes from the side of the head, and the short external auditory canal, the inner end of which is closed by the eardrum (tympanic membrane).

The function of the outer ear is to collect sound waves and direct them to the eardrum. The middle ear is a narrow, air-filled cavity in the temporal bone. It is spanned by a chain of three tiny bones – the malleus (hammer), the incus (anvil), and the stapes (stirrup), collectively called the ossicles. This chain of ossicles (auditory ossicles) conducts sound from the eardrum to the inner ear, known since Galen (2nd century AD) as the labyrinth. It is an intricate system of fluid-filled passages and cavities deep inside the temporal bone. Binaural audio recordings are made using a dummy head, where microphones are placed in the ears of the dummy head to capture sound as it would be heard by human ears.

The inner ear consists of two functional units: the vestibular apparatus, consisting of the vestibule and semicircular canals, which contains the sensory organs of postural balance; and the snail shell-like cochlea, which contains the sensory organ of hearing. These sensory organs are highly specialized endings of the eighth cranial nerve, also called the vestibulocochlear nerve.

Thus, the outer and middle ear basically serve to mechanically pick up, filter, and amplify the sound that is received through the pinna. However, the transmission of sound waves to our brain is handled by over 18,000 hair cells, which are surrounded by fluid and located in the cochlea in the inner ear.

Psychoacoustic effects

The blog post Personalized 3D Audio - The Holy Grail called HRTF discusses how spatial hearing is possible for personalized 3D audio. But, although the structure and function of the human auditory system have been extensively researched, perceptual processing in the brain remains a topic that has not yet been conclusively elucidated.

Various models and theories based on listening experiments and observations of subjects have led to several findings in the area of spatial masking effects and the perception of music and speech.

What is Duplex Theory?

The Duplex Theory, developed by Lord Rayleigh (1907), describes the ability of humans to localize sound events using Interaural Time Differences (ITDs) and Interaural Level Differences (ILDs) between the left and right ear.

Binaural microphones are essential in capturing sound for accurate localization, as they mimic the human ear’s natural hearing process.

What is the interaural time difference (ITD)?

The interaural time difference results from the spatial separation of the ears by the head. This separation means that, depending on the direction, sound has to travel paths of different lengths from the source to each ear, so it arrives at the two ears at different times. The maximum time difference between the left and right ear is about 0.63 ms, which corresponds to the size of a human head (about 17-20 cm). ITDs are mainly effective in a range below 1200 Hz.

For spatial listening in 3D audio, interaural delay differences are the most important features for localizing sound events or sources.

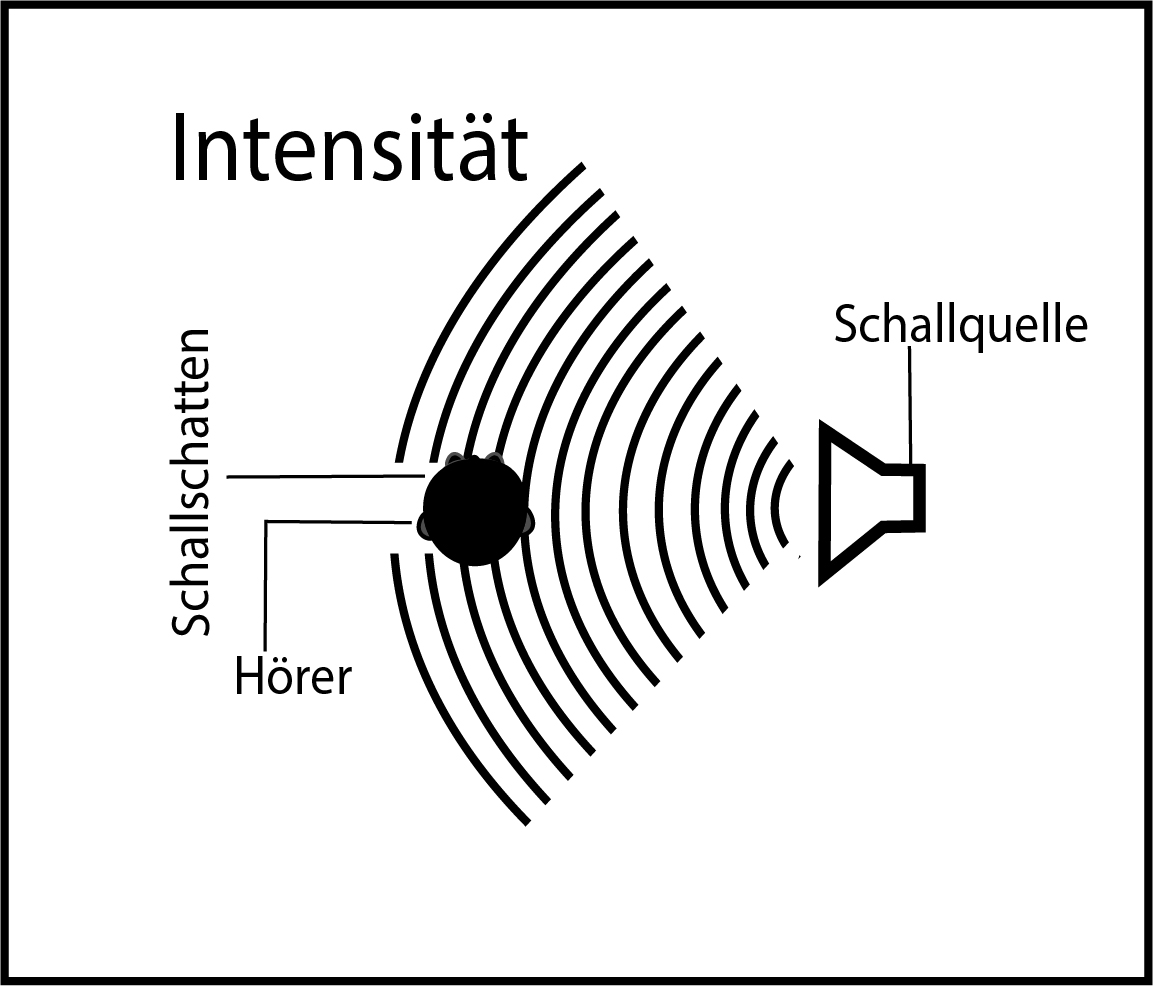
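The relationship between head size and arrival time difference can be estimated with a simple spherical head model. The sketch below uses Woodworth's approximation, which is not part of this article and is swapped in here purely for illustration; the head radius of 8.75 cm and the speed of sound of 343 m/s are assumed values.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed
HEAD_RADIUS = 0.0875    # m, assumed average head radius


def itd_woodworth(azimuth_deg, head_radius_m=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Approximate ITD in seconds for a distant source.

    azimuth_deg: 0 is straight ahead, 90 is fully to one side.
    The formula is meant for azimuths between 0 and 90 degrees.
    """
    theta = math.radians(azimuth_deg)
    return (head_radius_m / c) * (theta + math.sin(theta))


for angle in (0, 30, 60, 90):
    print(angle, f"{itd_woodworth(angle) * 1000:.2f} ms")
```

For a source directly to the side, this model yields roughly 0.66 ms, which is in the same range as the maximum value quoted above.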

What is the interaural level difference (ILD)?

As mentioned earlier, interaural level differences are produced by a shadowing effect of sound by the human head. If there is a sound source on the right, the left ear is shadowed by the head. This causes an intensity difference in the perceived sound between the two ears and is a major factor in localizing sound sources. Directional localization accuracy is about one degree for sources in front of the listener and about 15 degrees for sources to the side. ILDs are mainly effective in a range above 1600 Hz.

What role does the head-related transfer function (HRTF) play?
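A level difference between the two ears is usually expressed in decibels. As a minimal sketch, the following function compares the RMS level of a left and a right signal; the function names and the sign convention (positive means the left channel is louder) are assumptions for illustration.

```python
import math


def rms(samples):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def ild_db(left, right):
    """Interaural level difference in dB (positive: left is louder)."""
    return 20.0 * math.log10(rms(left) / rms(right))


# Source on the right: the shadowed left ear receives half the amplitude
right = [1.0, -1.0, 1.0, -1.0]
left = [0.5, -0.5, 0.5, -0.5]
print(f"{ild_db(left, right):.1f} dB")
```

Halving the amplitude at the shadowed ear corresponds to a level difference of about 6 dB.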

Rayleigh’s duplex theory and the relationships it describes play an essential role in the localization of sound. However, if, for example, two acoustic sources are placed symmetrically at the right front and right back of the human head, they will produce equal ITDs and ILDs according to the duplex theory. This is known as the cone of confusion.

The same is true when the sound source is displaced vertically, up and down. Nevertheless, the human ear is able to actually distinguish these sources. Angle-dependent resonance phenomena, which occur at the outer ear, enable localization also in the vertical plane, and the shape of the auricle is decisive for the distinction between front and back.

In other words, the HRTF describes the complex filtering effect of the head, torso, and auricles that enables localization. The frequency-dependent boosts and dips that are created by the outer ear and play an important role in front/back/top localization are explained in more detail by the directional bands of Jens Blauert.

The Blauert bands

The directional change in sound caused by Blauert’s bands can be electronically simulated to create a virtual perception of where an acoustic event is coming from. Multiple frequency ranges can be adjusted to create the effect of a sound source in front of, behind, or above the listener.

As shown below:

- Front: very present, close, direct, superficial – Can be achieved by boosting frequencies in a range of 300 to 400 Hz and 3 to 4 kHz and attenuating frequencies around 1 kHz.

- Rear (and top): diffuse, distant, spatial – Can be achieved by amplifying frequencies around 1 kHz.

In general, these boosts and cuts are described by Blauert’s directional bands, although the exact position of the peaks and notches in these resonance effects differs greatly from person to person.

Since HRTF-based localization mainly relies on learned stimulus patterns that stem from individual anatomical differences, a generalized function cannot be provided. Methods such as dummy head stereophony are also challenging because the dummy head usually differs considerably from the listener’s own head.
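The frequency ranges listed above can be collected in a small lookup table, for example as a starting point for EQ settings. This is only a sketch: the structure, the names, and the default gain of 3 dB are hypothetical, and only the frequency ranges themselves come from the list above.

```python
# (low_hz, high_hz, direction_of_change): +1 means boost, -1 means cut.
# "Around 1 kHz" is represented by a single center frequency.
BLAUERT_EQ = {
    "front": [(300.0, 400.0, +1), (3000.0, 4000.0, +1), (1000.0, 1000.0, -1)],
    "rear": [(1000.0, 1000.0, +1)],  # also used for "top"
}


def eq_moves(direction, gain_db=3.0):
    """Return (low_hz, high_hz, gain_db) tuples for a perceived direction.

    gain_db is an arbitrary illustrative amount, not a recommended value.
    """
    return [(low, high, sign * gain_db)
            for low, high, sign in BLAUERT_EQ[direction]]


for low, high, gain in eq_moves("front"):
    print(f"{low:.0f}-{high:.0f} Hz: {gain:+.1f} dB")
```

Because the individual resonances differ so much, such values would need to be tuned by ear for each listener and signal.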

In loudspeaker reproduction based on phantom sound sources, the complex filtering characteristics of the human pinna lead to significant errors. The reason for this is that the angles of the incoming sound waves do not match the original sound field. One consequence of this phenomenon is large amplitude errors, which lead to a boost of the phantom source (center) in loudspeaker stereophony, among other things. The only remedy is to reconstruct the original sound field.

Wow, that was a “small” excursion into the world of spatial hearing in relation to 3D audio. It forms the basis of, and is essential for, my daily work with 3D audio. But what great and outrageous things can you do with it?

Just ask me!